Thanks for sharing that. I think it deserves some attention and study.

I've built it and am testing it, but it's crashing in the prompt call with PB. I will upload it before I go this evening.

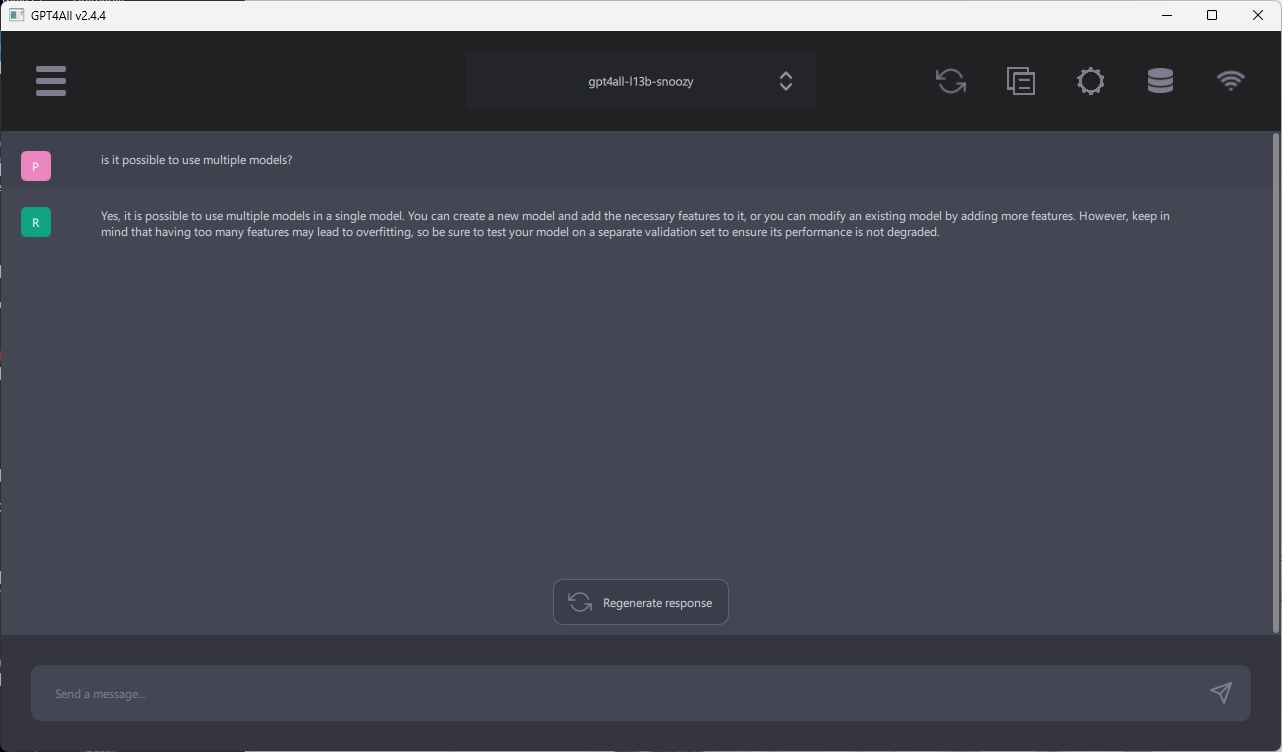

This is what I'm getting from the API before it crashes:

```
gptj_model_load: loading model from 'C:\Users\idle\AppData\Local\nomic.ai\GPT4All\ggml-gpt4all-j-v1.3-groovy.bin' - please wait ...
gptj_model_load: n_vocab = 50400
gptj_model_load: n_ctx = 2048
gptj_model_load: n_embd = 4096
gptj_model_load: n_head = 16
gptj_model_load: n_layer = 28
gptj_model_load: n_rot = 64
gptj_model_load: f16 = 2
gptj_model_load: ggml ctx size = 5401.45 MB
gptj_model_load: kv self size = 896.00 MB
gptj_model_load: ................................... done
gptj_model_load: model size = 3609.38 MB / num tensors = 285
GGML_ASSERT: C:\Users\idle\gpt4all\gpt4all-backend\llama.cpp-230511\ggml.c:5586: !ggml_is_transposed(a)
```

If you want to build it on Windows it's easy, but you need git, MinGW and CMake.

Then, in PowerShell:

```powershell
git clone --recurse-submodules https://github.com/nomic-ai/gpt4all
cd gpt4all/gpt4all-backend/
mkdir build
cd build
cmake -G "MinGW Makefiles" ..
cmake --build . --parallel
```

Sure, that took me half a day to work out and then it crashed, so who knows?

And here is the import that's in the zip:

```purebasic
Structure llmodel_error
  *message ; // Human readable error description; Thread-local; guaranteed to survive until next llmodel C API call
  code.l   ; // errno; 0 if none
EndStructure

; Open-ended arrays used to index into the raw float / int32 buffers
Structure arFloat
  e.f[0]
EndStructure

Structure arLong
  e.l[0]
EndStructure

Structure llmodel_prompt_context
  *logits.arFloat  ; // logits of current context
  logits_size.i    ; // the size of the raw logits vector
  *tokens.arLong   ; // current tokens in the context window
  tokens_size.i    ; // the size of the raw tokens vector
  n_past.l         ; // number of tokens in past conversation
  n_ctx.l          ; // number of tokens possible in context window
  n_predict.l      ; // number of tokens to predict
  top_k.l          ; // top k logits to sample from
  top_p.f          ; // nucleus sampling probability threshold
  temp.f           ; // temperature to adjust model's output distribution
  n_batch.l        ; // number of predictions to generate in parallel
  repeat_penalty.f ; // penalty factor for repeated tokens
  repeat_last_n.l  ; // last n tokens to penalize (a token count, so .l rather than .f)
  context_erase.f  ; // percent of context to erase if we exceed the context window
EndStructure

PrototypeC llmodel_prompt_callback(token_id.l)
PrototypeC llmodel_response_callback(token_id.l, *response)
PrototypeC llmodel_recalculate_callback(is_recalculating.l)

ImportC "libllmodel.dll.a"
  llmodel_model_create(model_path.p-utf8)
  llmodel_model_create2(model_path.p-utf8, build_variant.p-utf8, *error.llmodel_error)
  llmodel_model_destroy(model.i)
  llmodel_loadModel(model.i, model_path.p-utf8)
  llmodel_isModelLoaded(model.i)
  llmodel_get_state_size(model.i)
  llmodel_save_state_data(model.i, *dest.Ascii)
  llmodel_restore_state_data(model.i, *src.Ascii)
  llmodel_prompt(model.i, prompt.p-utf8, *prompt_callback, *response_callback, *recalculate_callback, *ctx.llmodel_prompt_context)
  llmodel_setThreadCount(model.i, n_threads.l)
  llmodel_threadCount.l(model.i)
  llmodel_set_implementation_search_path(path.p-utf8)
  llmodel_get_implementation_search_path() ; returns a string pointer, read it with PeekS
EndImport

Global ctx.llmodel_prompt_context
Global err.llmodel_error

OpenConsole()

; Callbacks return #True to let generation continue
ProcedureC CBResponse(token_id.l, *response)
  PrintN("CB Response")
  ProcedureReturn #True
EndProcedure

ProcedureC CBPrompt(token.l)
  PrintN("CB Prompt")
  ProcedureReturn #True
EndProcedure

ProcedureC CBRecalc(is_recalculating.l)
  PrintN("Is Calc")
  ProcedureReturn #True
EndProcedure

path.s = "C:\Users\idle\AppData\Local\nomic.ai\GPT4All\ggml-gpt4all-j-v1.3-groovy.bin"

; Pass the address of the global err structure (*err was an undeclared null pointer)
model = llmodel_model_create2(path, "avxonly", @err)

If model
  llmodel_setThreadCount(model, 12)
  If llmodel_loadModel(model, path)
    prompt.s = "hello"
    PrintN(prompt)
    x = llmodel_prompt(model, prompt, @CBPrompt(), @CBResponse(), @CBRecalc(), @ctx)
    PrintN("after prompt")
    Input()
    llmodel_model_destroy(model)
  EndIf
EndIf
```
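Note that the listing above passes `ctx` to `llmodel_prompt` without setting any of its fields, so `n_ctx`, `n_predict`, `n_batch` and the sampling values are all zero. Whether that has anything to do with the assert is not established here. The following is only a minimal sketch of filling the context first: the helper name `InitPromptContext` is hypothetical, and every value except `n_ctx` (copied from the load log) is an illustrative assumption, not a default taken from the GPT4All headers. The structure is repeated only so the snippet compiles on its own; drop it when pasting into the import file.

```purebasic
; Sketch only: values are illustrative assumptions, not GPT4All defaults.
Structure llmodel_prompt_context
  *logits           ; float pointer: logits of current context
  logits_size.i     ; size_t
  *tokens           ; int32 pointer: tokens in the context window
  tokens_size.i     ; size_t
  n_past.l
  n_ctx.l
  n_predict.l
  top_k.l
  top_p.f
  temp.f
  n_batch.l
  repeat_penalty.f
  repeat_last_n.l   ; assumed to be a 32-bit integer on the C side
  context_erase.f
EndStructure

; Hypothetical helper: fill a context with non-zero settings.
Procedure InitPromptContext(*c.llmodel_prompt_context)
  *c\logits         = 0     ; no buffers supplied by the caller
  *c\logits_size    = 0
  *c\tokens         = 0
  *c\tokens_size    = 0
  *c\n_past         = 0     ; fresh conversation
  *c\n_ctx          = 2048  ; matches n_ctx in the load log
  *c\n_predict      = 128   ; assumed value
  *c\top_k          = 40    ; assumed value
  *c\top_p          = 0.9   ; assumed value
  *c\temp           = 0.7   ; assumed value
  *c\n_batch        = 8     ; assumed value
  *c\repeat_penalty = 1.2   ; assumed value
  *c\repeat_last_n  = 10    ; assumed value
  *c\context_erase  = 0.5   ; assumed value
EndProcedure

Global ctx.llmodel_prompt_context
InitPromptContext(@ctx)
Debug ctx\n_ctx
```

In the import file this would mean calling `InitPromptContext(@ctx)` once before the `llmodel_prompt(...)` line.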

Here's the binary build. Extract the contents to the bin folder "C:\Users\idle\gpt4all\gpt4all-backend\build\bin".

I also installed gpt4all in c:\users\idle\gpt4all

https://dnscope.io/idlefiles/gpt4all_pb.zip