Actually, this gives good results (tested on Mac OS X and Windows only; it may be completely different on Linux):


; Converts a desired pixel height into the size value LoadFont() expects.
; Note: despite the parameter name, "points" carries the desired height in pixels.
Procedure.d ScreenPoint2PixelsY(points.d)
  CompilerIf #PB_Compiler_OS = #PB_OS_Windows
    ; Windows: LoadFont() seems to take points, so scale by 72 / screen DPI
    Protected hDC = GetDC_(GetDesktopWindow_())
    Protected dpiY = GetDeviceCaps_(hDC, #LOGPIXELSY)
    ReleaseDC_(GetDesktopWindow_(), hDC)
    ProcedureReturn (72.0 / dpiY) * points
  CompilerElse
    ; Mac OS X: LoadFont() seems to take pixels already, so pass the value through
    ProcedureReturn points
  CompilerEndIf
EndProcedure

LoadFont(0, "Arial", ScreenPoint2PixelsY(48), #PB_Font_HighQuality)
CreateImage(0, 400, 100, 24, $FFFFFF)

StartDrawing(ImageOutput(0))
  DrawingFont(FontID(0))
  DrawingMode(#PB_2DDrawing_Transparent)
  DrawText(0, 0, "Arial 48!", $FF0000)
  Debug TextHeight("X") ; measured text height in pixels
StopDrawing()

ShowLibraryViewer("Image", 0)
CallDebugger

;UsePNGImageEncoder()
;SaveImage(0, "test_mac.png", #PB_ImagePlugin_PNG)

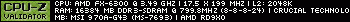

Output for size 48:

- Windows: 55

- Mac: 54

Output for size 148:

- Windows: 165

- Mac: 166

On Windows, the font size seems to be in Windows "points" (device independent).

On Mac OS X, the font size seems to be in plain pixels.
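
To make the point/pixel relationship concrete, here is a minimal sketch of the arithmetic, assuming the usual definition of 72 points per inch. `PixelsToPoints()` and `PointsToPixels()` are names made up for this illustration, and the 96 DPI value is just the screen resolution mentioned in this post; other screens will differ.

```purebasic
; Sketch only: PixelsToPoints() and PointsToPixels() are illustrative names,
; not PureBasic commands. Assumes 1 point = 1/72 inch.
Procedure.d PixelsToPoints(pixels.d, dpi.d)
  ProcedureReturn pixels * 72.0 / dpi
EndProcedure

Procedure.d PointsToPixels(points.d, dpi.d)
  ProcedureReturn points * dpi / 72.0
EndProcedure

Debug PixelsToPoints(48, 96) ; 48 * 72 / 96 = 36 points for a 48-pixel font
Debug PointsToPixels(36, 96) ; 36 * 96 / 72 = 48 pixels
```

So on a 96 DPI Windows screen the procedure above would hand 36 to LoadFont() when 48 pixels are requested, while on Mac OS X the 48 is passed through unchanged.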

@ts-soft:

On a screen, a 'dot' means a pixel, so it is the same. My screen can display 96 pixels per inch (PPI), and my printer can print 1200 dots per inch (DPI). It is the same thing: how many real "points" a device is able to display. The display is 96 DPI/PPI, the printer is 1200 DPI/PPI.
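
As a rough worked example using only the two resolutions quoted above, the physical length covered by a run of dots is the dot count divided by the device resolution, so the same 96 dots span very different lengths on the two devices:

```latex
\text{length (in)} = \frac{\text{dots}}{\text{DPI}}, \qquad
\frac{96}{96} = 1\ \text{in (screen)}, \qquad
\frac{96}{1200} = 0.08\ \text{in (printer)}
```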

PureBasic's LoadFont() size is indeed very different on different platforms, and that's not good.

In my opinion, it would be better if LoadFont() always took an exact height in pixels, and functions like GetDPIX() and GetDPIY() were provided. In this case we would just know the DPI for screens, sprites, printers, etc...
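
A rough sketch of what that could look like in user code follows. Both `GetDPIY()` and `LoadFontPixels()` are hypothetical names written for this post, not PureBasic commands. The Windows branch relies on the WinAPI calls already used above, and the other branch simply assumes the Mac OS X behaviour observed here (size in pixels); Linux is untested.

```purebasic
; Hypothetical helpers: GetDPIY() and LoadFontPixels() are NOT PureBasic commands.
CompilerIf #PB_Compiler_OS = #PB_OS_Windows
  Procedure.i GetDPIY()
    Protected hDC = GetDC_(0)                        ; DC for the whole screen
    Protected dpi = GetDeviceCaps_(hDC, #LOGPIXELSY) ; vertical pixels per logical inch
    ReleaseDC_(0, hDC)
    ProcedureReturn dpi
  EndProcedure
CompilerEndIf

; Loads a font whose size is given as a pixel height on every platform.
Procedure.i LoadFontPixels(font.i, name.s, pixelHeight.d, flags.i = 0)
  CompilerIf #PB_Compiler_OS = #PB_OS_Windows
    ; Windows: LoadFont() appears to expect points, so convert pixels to points
    ProcedureReturn LoadFont(font, name, Round(pixelHeight * 72.0 / GetDPIY(), #PB_Round_Nearest), flags)
  CompilerElse
    ; Assumption: size is already in pixels (observed on Mac OS X, untested on Linux)
    ProcedureReturn LoadFont(font, name, Round(pixelHeight, #PB_Round_Nearest), flags)
  CompilerEndIf
EndProcedure

LoadFontPixels(0, "Arial", 48, #PB_Font_HighQuality)
```

The wrapper keeps the platform difference in one place, so calling code only ever deals with pixel heights.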